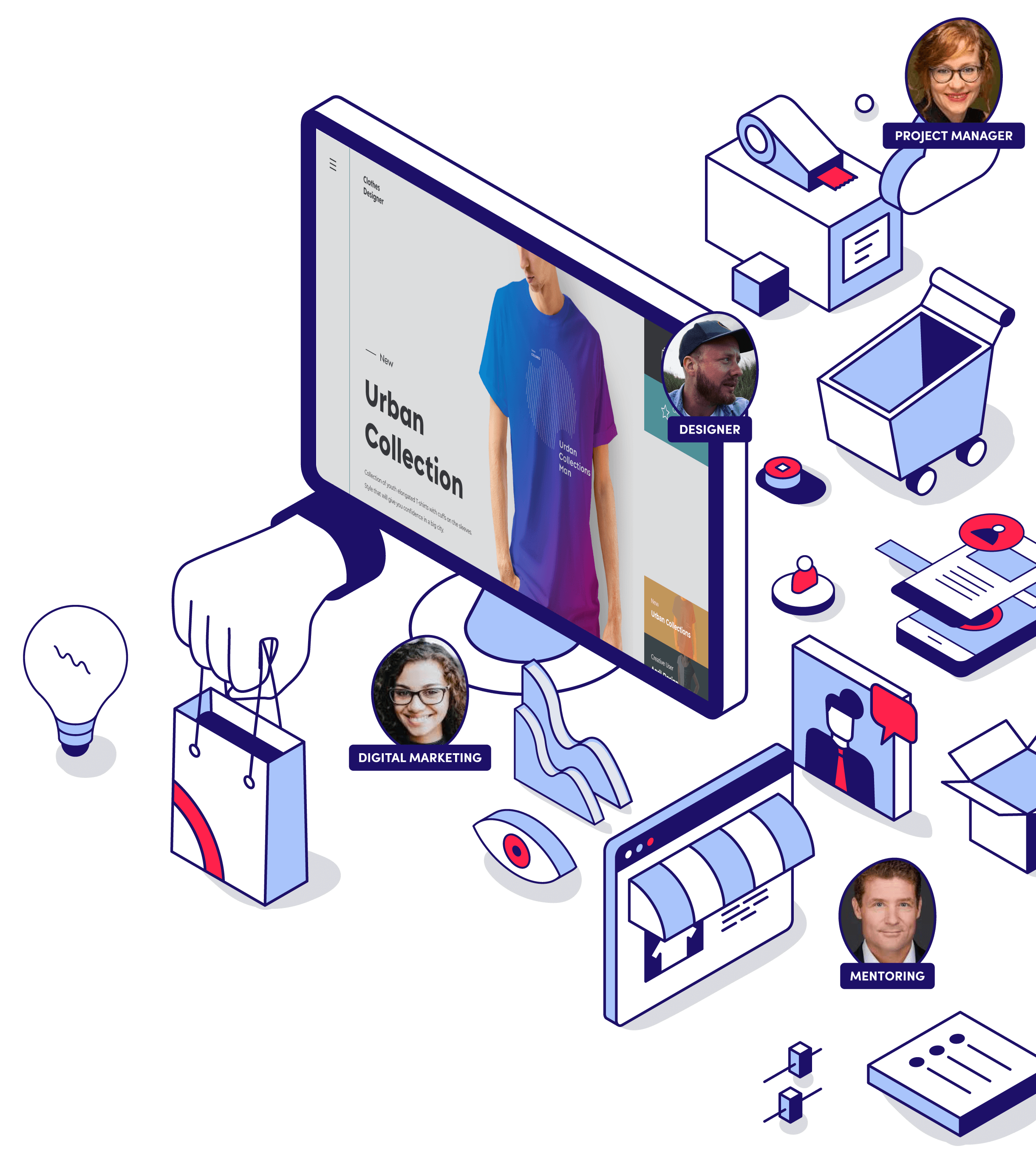

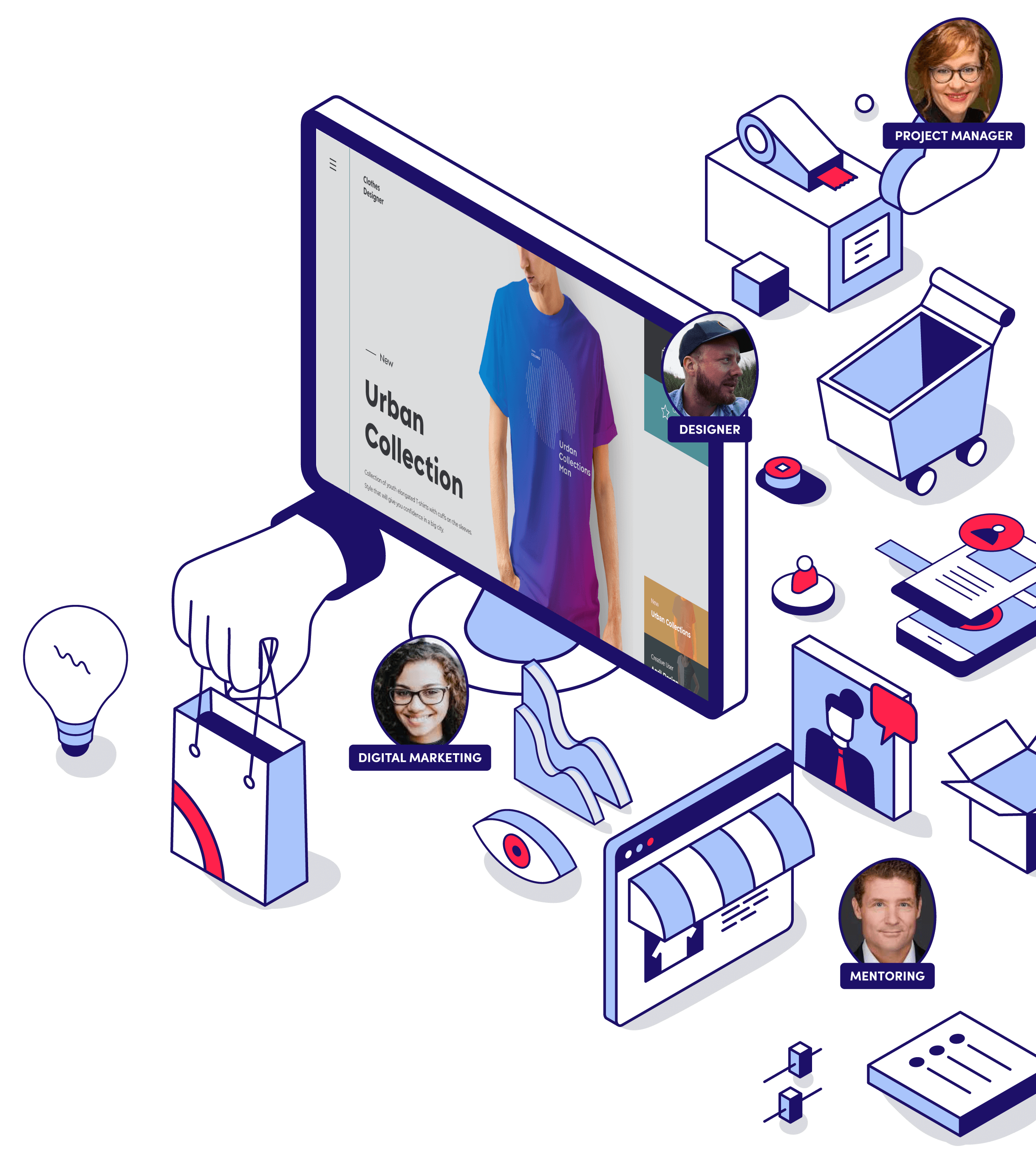

Dive into Flow, where advanced AI meets the expertise of real-world professionals in ecommerce. Our unique platform offers you the smartest AI tools to scale your business, all fine-tuned and managed by seasoned experts in development, design, and digital marketing.

The best ecommerce minds, doing what they do best, when you need them most.

Our ecommerce experts can help you harness the power of the world’s best platforms to accelerate your business growth and smash your goals.

Perfect for start-ups and changemakers, our experts lay the foundations for your growth. Need a new Shopify site? Our designers, developers and project managers have got you covered. Looking for support with your product strategy? We have experienced buyers available on demand to put products on your virtual shelves. Struggling to get your business plan looking ship-shape? Let our specialist finance directors guide you to success.

Looking to grow your online business? Join the club. Our ecommerce experts have been there, done that, for brands that you know and love. Increase sales through better-qualified traffic, with support from our SEO specialists. Get more bang for your buck by engaging with a paid search specialist. Not sure which channels are working? Our digital marketing managers can conduct a full audit and highlight where to pull back and where to double down.

Can’t see the wood for the trees? Hire an ecommerce expert for a strategy call and come out of it with a clear growth plan. Our conversion optimisation specialists live and breathe incremental improvements that make a real difference to your bottom line. Need someone to hold your hand or give you a shove in the right direction? We have coaches and mentors ready to challenge you, push you, and help your business succeed.

Our curated experts have helped create, manage and grow online businesses just like yours, so what are you waiting for?